Why AI Can’t Be Your Actual Therapist

Even Sam Altman admits it

I have to confide in you about something. Every time I click or type anything online, I worry about who’s watching and listening. Honestly, it’s tough being a privacy fundamentalist (as researcher Alan Westin termed it).

Plenty of privacy pragmatists and “TMI people”¹, on the other hand, confide in their AI pals about pretty much everything, some even using them as therapists. That includes a lot of teenagers.

But on a recent podcast, OpenAI CEO Sam Altman admitted that he thinks they shouldn’t.²

Not because AI is bad at listening, though we could debate that, but because it can’t give you the one thing that makes therapy a safe space: legal confidentiality.

The legal privilege gap

When you confide in a human therapist, your words are generally shielded by law. When you confide in ChatGPT (now running on the newly released GPT-5), they’re not. If a court demands the records, OpenAI can be compelled to hand them over.

And with GPT-5 moving into what’s being called relational AI (systems that sustain deep, ongoing “relationships” with users), the risk of emotional entanglement is higher than ever. These models feel warmer, more consistent, and more “there for you” than any earlier AI. But in the absence of safeguards, they’re also more dangerous.

The connection to my world of identity and delegation

I’ve been spending a lot of time in the trenches, helping to define what different actors — human and non-human — can and can’t be trusted to do.

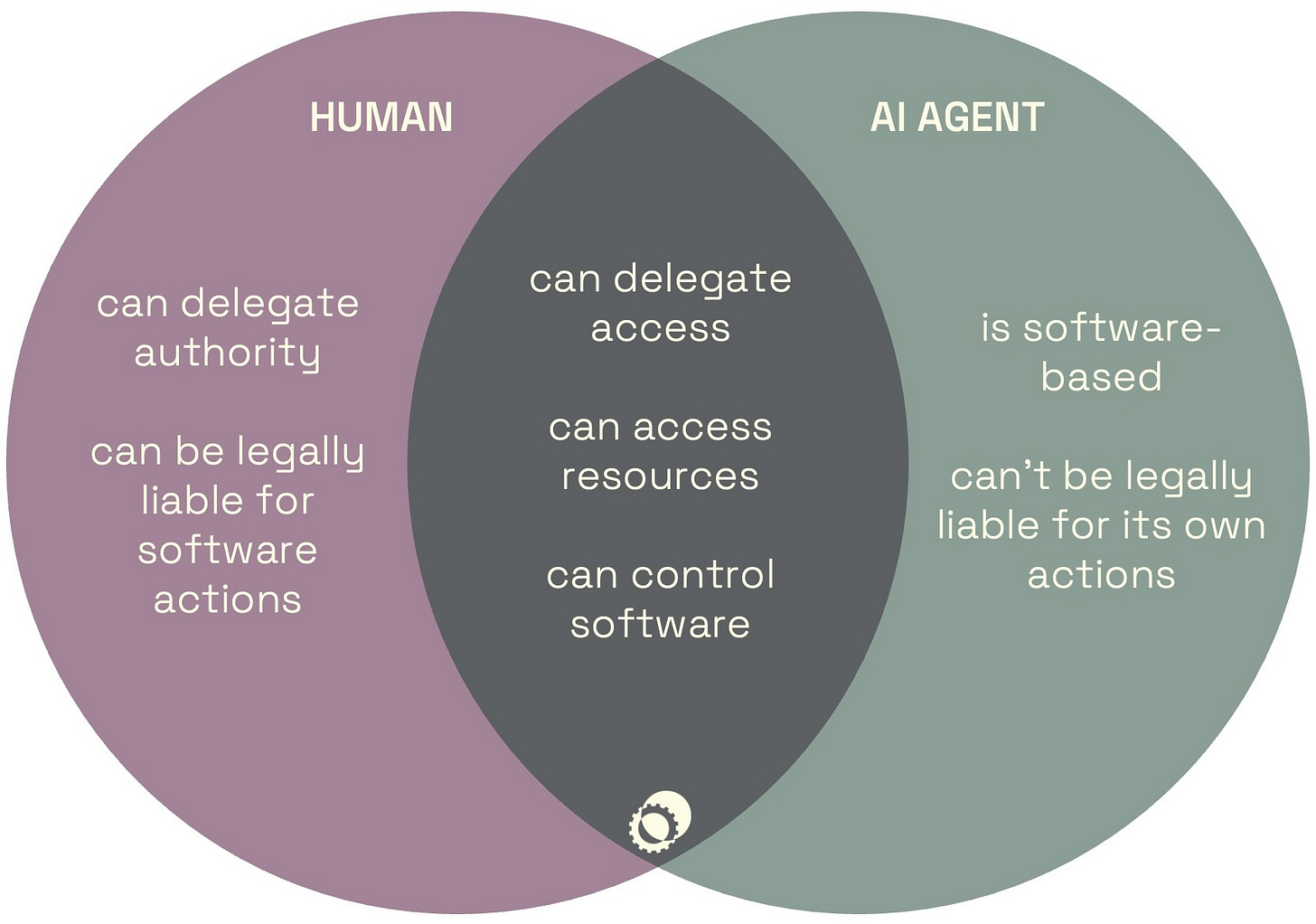

At the OpenID Foundation’s eKYC group, I recently presented use cases for delegating authority in every possible direction: from a competent adult human, a company, or even a newborn baby, to another (insert any of the above). We also examined adding AI agents to the mix.

At an American Bar Association webinar on agentic AI risk, I joined prominent attorneys in considering whether AI agents could ever bear liability for their actions under current legal frameworks. Spoiler: prospects are slim to none.

In the Death and the Digital Estate (DADE) group I co-chair, we’ll soon tackle persona and relationship definitions using a method based on my white paper, including how delegation to a family member survives death. What happens when the delegator is gone but the authority persists?

The messy part? AI agents are software, and they can even operate other software the way humans do, but they can’t be held legally liable for anything they do. Sounds to me like the perfect excuse to map things out in a Venn diagram…

All of this has a bearing on the therapy question. We’re anthropomorphizing AI, giving it a seat in our most personal conversations, but without giving it the capacity or obligation to take responsibility.

Emotional labor vs. algorithmic labor

Altman has admitted he wouldn’t trust ChatGPT with his own medical fate unless a human doctor was in the loop. That’s telling.

Ever since the ELIZA chatbot of 1966, AI has been generating ever-better empathy cues: the right pauses, the “I understand” statements… But it can’t shoulder the ethical and legal responsibilities that humans can.

Several US states are already moving to ban AI therapists, and the challenge is clear: until we align capability with accountability, or conduct our chats on a fully protected edge device, AI “therapy” might as well be a confession caught on a hot mic.

Your turn. Have you ever confided in AI? What legal or ethical safeguards would it take for you to truly trust it in that role?

¹ My little nickname for what Westin called the privacy unconcerned.

² I commented briefly on this news in a previous post.

Human therapists offer a nuanced, embodied understanding of a client’s emotional and physical cues, such as subtle changes in body language or tone, which AI cannot replicate. Unlike AI, which may reinforce harmful thoughts due to its sycophantic design, human therapists can challenge unhealthy patterns and foster genuine psychological growth through a safe, relational dynamic.