From Chance to Control (Consent Is Dead, Part 4)

Consent is dead. But we should be ambitious about what comes next.

Welcome back! In this “Consent Is Dead” series, I’ve been challenging current consent and privacy practices, dissecting their flaws, and exploring solutions.

The digital consent charade is so well known that, over a decade ago, a documentary was made about it: Terms and Conditions May Apply. You’d think progress would have been made by now. And yet, just last week, news broke that Google and Meta ignored their own rules in secret teen-targeting ad deals.

This inquiry, begun at the EIC24 conference in June, may sound defeatist. But it’s meant to get us to think bigger. What would it take for businesses to turn away from forced or duplicitous monetization of personal data – and never miss it?

As we know, it’s our underlying beliefs that shape our solutions. To tackle a failing situation, we need to go back to the core beliefs behind it.

In post 1, I challenged our limiting beliefs about digital consent…and I brought receipts.

We can’t – right now, anyway – force data-hungry companies to ingest data in tiny sips.

We can’t – using today’s methods – prevent identity correlation.

And we can’t empower people by asking them pretty much anything at the point of service.

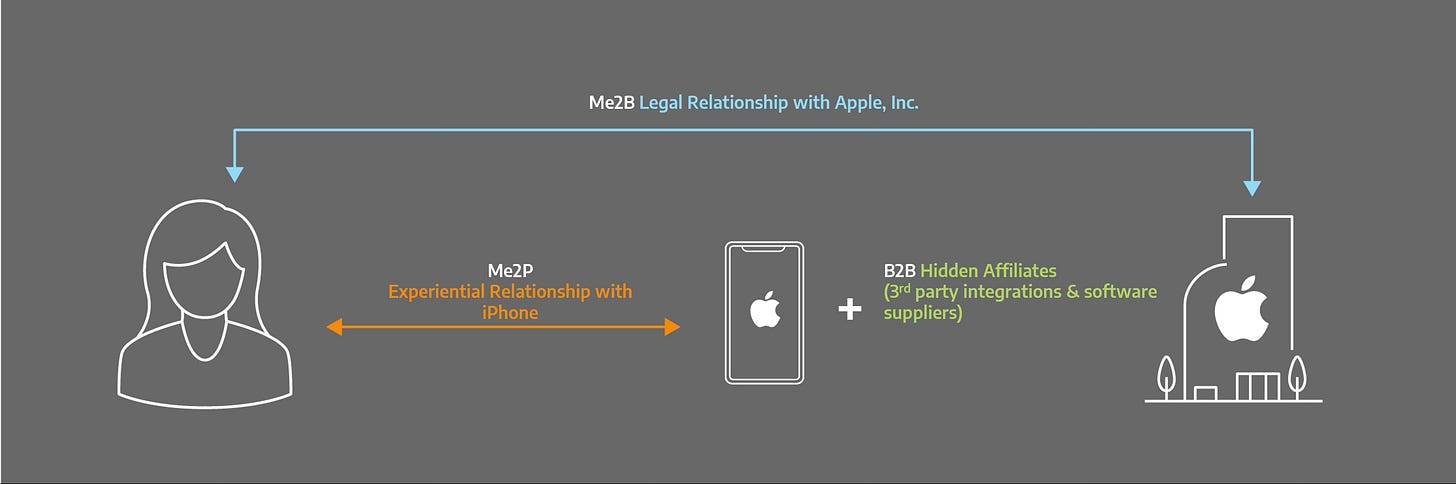

In post 2, I introduced a new belief and related solutions that actually empower people: Individuals Have the Right to Determine Their Relationship Status.

In post 3, I introduced a new belief and related solutions that could start promoting better behavior by businesses right away: Permissions About Digital Assets Should Be Interoperable.

Here’s a third new belief that I hope will embolden us to make lasting change…

Data Shielding Requires Potent Solutions

For personal data to flow safely and usefully for all parties, it needs shielding – which in turn requires potent solutions. I mean really potent: capable of paving the way for new business models and ripping up old ones.

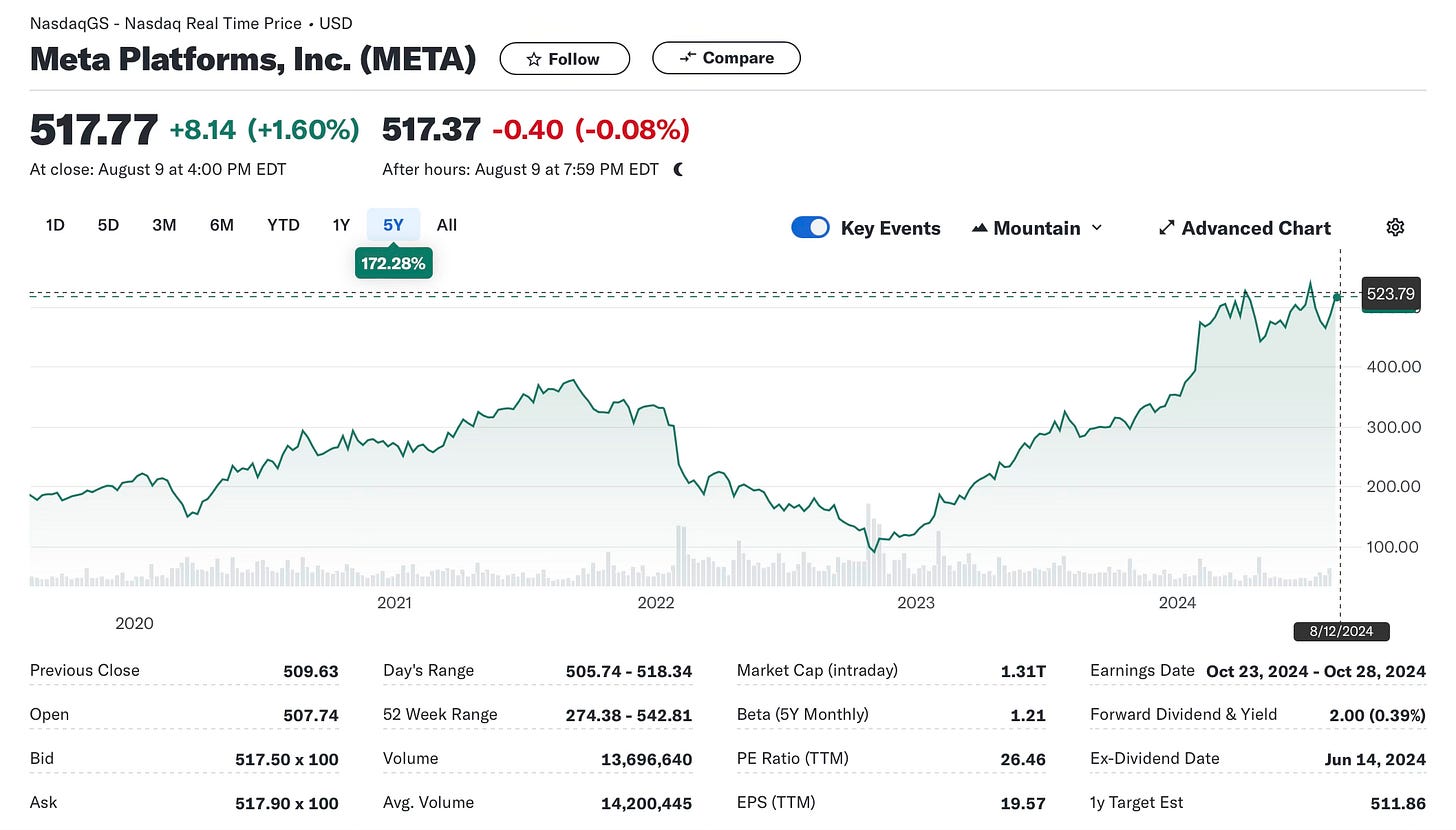

You might think Apple’s App Tracking Transparency feature counts as potent. Its launch in April 2021 made cross-app tracking more difficult and had a seismic effect on Facebook’s business model.

“The potential loss of $10 billion in ad sales revenue accounts for nearly 8% of Facebook’s yearly revenue — and the market reacted, with the stock price dipping 26%.”

– Forbes in 2022

But tech loopholes quickly began to appear, and in a few short years Meta has more than recovered from its stock stumble.

You might expect GDPR to be potent enough to help us turn the corner. Unfortunately, after six years of enforcement and billions in infrastructure investments and fines, the cavalry is not coming.

Famed privacy activist Max Schrems spoke at EIC24 and shared a study of more than 1,000 data protection officers. Of those surveyed, 74% said that a Data Protection Authority walking through the door of an average data controller would “surely find relevant GDPR violations.” National authorities are struggling too: over 300 of the GDPR complaints lodged by his nonprofit, noyb, have been pending for more than two years.

“Right now we see, after the first hype of GDPR, more of a downward spiral.”

– Max Schrems at EIC24

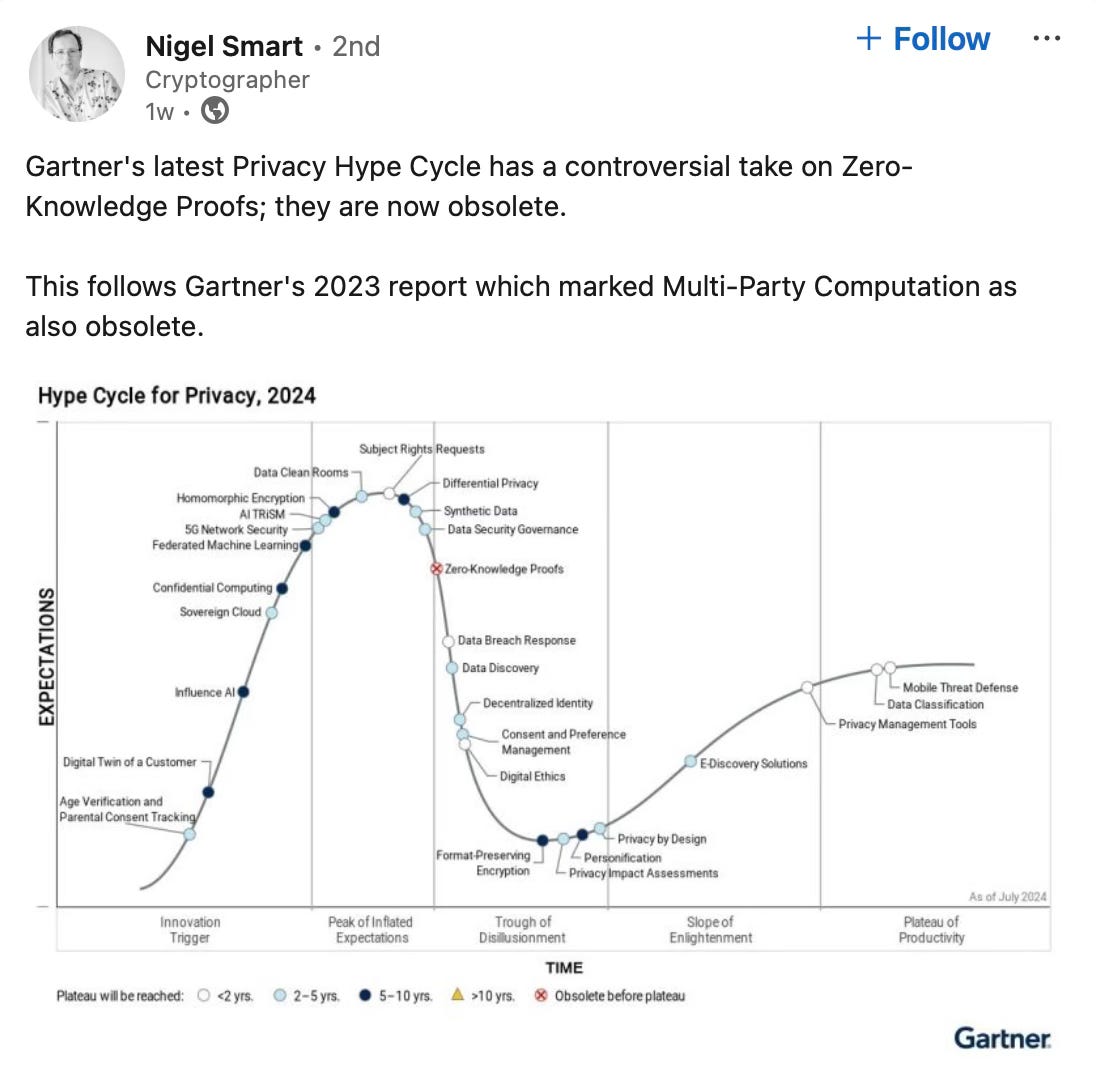

You might pin your hopes on selective disclosure techniques, particularly cryptographically protected ones like Zero Knowledge Proofs. But as we’ve seen, they’re no panacea. What’s the latest news on this front?

Leading cryptographers submitted some tough feedback on the EU’s Architecture Reference Framework (ARF) for digital identity wallets, demanding the use of anonymous credentials. Will that help? Timothy Ruff, one of the inventors of AnonCreds, acknowledges that correlation and re-identification of individuals are trivially easy.
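To make the correlation point concrete, here is a minimal Python sketch of salted-hash selective disclosure, loosely in the spirit of SD-JWT-style designs. It is an illustrative assumption, not AnonCreds, the ARF, or any wallet’s actual format, and every function name in it is hypothetical. The issuer commits to each claim, the holder reveals only some claims, and verification still succeeds. Yet every presentation carries the same signed digest list and tag, so two verifiers who compare notes can link them. HMAC with a key the verifier also holds stands in for a real issuer signature purely to keep the sketch to the standard library.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION: HMAC with a shared key stands in for a real issuer signature
# (a real credential would use a public-key signature the verifier can check).
ISSUER_KEY = secrets.token_bytes(32)


def claim_digest(salt: str, name: str, value: str) -> str:
    """Salted hash that commits to one claim without revealing it."""
    return hashlib.sha256(f"{salt}|{name}|{value}".encode()).hexdigest()


def sign(digests: list[str]) -> str:
    return hmac.new(ISSUER_KEY, "".join(digests).encode(), hashlib.sha256).hexdigest()


def issue(claims: dict[str, str]) -> dict:
    """Issuer salts and hashes each claim, then signs the sorted digest list."""
    salts = {name: secrets.token_hex(16) for name in claims}
    digests = sorted(claim_digest(salts[n], n, v) for n, v in claims.items())
    return {"claims": claims, "salts": salts, "digests": digests, "tag": sign(digests)}


def present(credential: dict, reveal: list[str]) -> dict:
    """Holder discloses only the chosen claims, plus the signed digest list."""
    disclosed = {n: (credential["salts"][n], credential["claims"][n]) for n in reveal}
    return {"disclosed": disclosed, "digests": credential["digests"], "tag": credential["tag"]}


def verify(presentation: dict) -> bool:
    """Check the signature, then check each revealed claim against a signed digest."""
    if not hmac.compare_digest(sign(presentation["digests"]), presentation["tag"]):
        return False
    return all(
        claim_digest(salt, name, value) in presentation["digests"]
        for name, (salt, value) in presentation["disclosed"].items()
    )


cred = issue({"name": "Alice", "birth_year": "1990", "city": "Lyon"})
to_bar = present(cred, ["birth_year"])   # the bar sees only the birth year
to_shop = present(cred, ["city"])        # the shop sees only the city

print(verify(to_bar), verify(to_shop))   # True True
# ...but both verifiers received the identical tag, so pooling logs links them.
print(to_bar["tag"] == to_shop["tag"])   # True
```

Anonymous-credential schemes aim to avoid exactly this kind of reusable value, but the surrounding exhaust (network addresses, timing, and the disclosed values themselves) can still link people, which is the thrust of Ruff’s point.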

And Gartner recently published a Hype Cycle for Privacy that places consent management, Zero Knowledge Proofs, and decentralized identity in the “trough of disillusionment.” ZKPs were judged “obsolete before plateau.”

What’s potent enough to change the dynamic?

PETs To the Rescue?

Privacy-enhancing technologies (PETs) have been a favored area of privacy innovation and research for many years. Wikipedia divides them into “soft” technologies, which rely on a trusted third party, and “hard” ones, which don’t.

I do like to see individuals’ aims made enforceable by tech, and I have even attempted to contribute to the “soft” side. Unfortunately, even the list of “hard” solutions doesn’t inspire confidence, so we can’t lean on them too heavily.

That’s on the one hand. On the other… Privacy isn’t secrecy, as the UMA community has long said. You can’t lock yourself away in a metal box and emit no data. Absolute secrecy isn't viable because sharing data is necessary – and desirable – for too many reasons. We have to share something, and so we have to strive for better options.

Now, on the gripping hand… Interacting with any online service exposes something, which in turn can be used to correlate you against your will.

Is there a potent enough option anywhere to fix this?

Since running across a nice explainer video in March, I’ve started to feel some hope about one technology: fully homomorphic encryption (FHE). Put simply, FHE allows data to be processed while it remains encrypted. You can run computations on encrypted data and get back results that are still encrypted, readable only with your key.
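For a feel of what “computing on encrypted data” means, here is a toy Python sketch of the Paillier cryptosystem. Note the substitution: Paillier is only additively (partially) homomorphic, not fully homomorphic. FHE schemes such as BFV, CKKS, or TFHE also support multiplying ciphertexts together, which is what opens the door to general computation. The tiny fixed primes are for illustration only and offer no security. The point is that a service can add a user’s encrypted values and scale them by a public constant without ever seeing them; only the key holder can decrypt the result.

```python
import math
import secrets

# Toy key material: two small, well-known primes. Real keys use primes of
# 1024+ bits; these values provide NO security and are for illustration only.
P, Q = 10007, 10009
N = P * Q                      # public key
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)   # private: lcm(p-1, q-1)
MU = pow(LAM, -1, N)           # private: inverse of LAM mod N (valid for g = N + 1)


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with generator g = N + 1 and fresh randomness r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (1 + m * N) * pow(r, N, N2) % N2   # g^m = 1 + m*N (mod N^2)


def decrypt(c: int) -> int:
    """Recover m using the private values LAM and MU."""
    x = pow(c, LAM, N2)
    return (x - 1) // N * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod N)."""
    return c1 * c2 % N2


def scale_encrypted(c: int, k: int) -> int:
    """Raising a ciphertext to a public k multiplies the hidden plaintext by k."""
    return pow(c, k, N2)


# User side: encrypt three monthly spending figures before sending them.
spend = [120, 75, 310]
ciphertexts = [encrypt(v) for v in spend]

# Service side: add the values and scale the total by a public constant,
# without ever seeing 120, 75 or 310.
total_ct = ciphertexts[0]
for ct in ciphertexts[1:]:
    total_ct = add_encrypted(total_ct, ct)
scaled_ct = scale_encrypted(total_ct, 12)

# User side again: only the key holder can read the results.
print(decrypt(total_ct), decrypt(scaled_ct))   # 505 6060
```

Because each encryption draws fresh randomness, encrypting the same value twice yields different ciphertexts, so the service cannot even tell whether two inputs are equal.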

Speaking at EIC24 in June, I advocated taking a second look at FHE, given the new wave of AI-supporting chips that are bringing FHE computation into a reasonable performance range. Since then, Apple has open-sourced its FHE solution and shipped a real-life application of it in iOS 18.

We may start seeing new solutions built on FHE that move the needle. I can imagine new companies competing with old ones by offering to remove the classic privacy tradeoff in operating on personal data.

Some final cautions are in order (are we onto a fourth hand?). In the UMA community, we also say Privacy isn’t encryption. Not only can encryption be broken or bypassed; it’s also simply a technique that needs a surrounding solution environment. Beware of just “doing crypto” and thinking it solves human challenges. The CEO of Enveil put it well:

"Homomorphic encryption libraries provide the basic cryptographic components for enabling the capabilities, but it takes a lot of work including software engineering, innovative algorithms, and enterprise integration features to get to a usable, commercial grade product.”

– Ellison Anne Williams in The Stack

We’ll also have to learn how to optimize data schemas and queries to enable real-world performance. Nonetheless, I’m hopeful that with the right innovation, FHE could count as potent enough.

Legal Remedies On the Way?

As I noted, GDPR hasn’t been potent enough to produce improved outcomes. But sometimes key legal frameworks or decisions can unlock potent new solutions.

Unfollow Everything was a neat technical trick invented by UK-based Louis Barclay in 2021. He created a popular browser extension that let Facebook users automatically unfollow all their friends, groups, and pages, clearing out their News Feed while keeping their connections. Researchers even started using it to study its effect on users’ happiness. It gave users vastly more control…but then Facebook banned Barclay for life and legally forced him to take down the tool.

Fast forward to May 2024. A lawsuit was filed on behalf of Ethan Zuckerman, a professor at UMass Amherst, who wants to create Unfollow Everything 2.0. Given the implicit threat posed by Facebook’s earlier action, he wants legal cover before building his tool. Meta has asked the court to toss the suit but says it won’t countersue yet.

It’s no fun having major digital-life decisions turn on single court cases, which can take a long time, but there’s no doubt such cases can have impact. I’m hopeful a win here could unlock newly viable market options for user-permissioned data sharing.

Putting It All Together

If we lay out all the challenges and solutions I’ve mooted, along with ideas arising from the recent Identerati Office Hours discussion, we can imagine some ambitious ways forward that could boost potency.

Selective Disclosure Efficacy

I’ve been pretty critical of selective disclosure in this series. In the face of easy re-identification attacks, is it pointless, or still valuable?

People still want their sharing preferences respected and enforced, and it’s better to minimize disclosure than maximize it. However, as Sam Smith has emphasized in his research, it’s easy for individuals to misunderstand or be misled by the experience of selective disclosure – for example, through a decentralized identity wallet – and wrongly believe that no additional exhaust data was captured. This suggests a need for better public awareness.

I believe: We should press ahead on enabling selective disclosure, while educating people about the reality of what can be done with the data they’re shedding.

I predict: People will get wise to the reality, just like they did with passwords (“make them secure”) and 2FA (“better to turn it on”) over the last decade. They already know cookies and similar tech are tracking them.

Enforceable Chain-Link Confidentiality

Many privacy analyses end up recommending a chain-link confidentiality approach, often out of exasperation when a PET doesn’t do the trick. The idea, first proposed in 2012, is to impose clear legal constraints that “stick” to each step of downstream use and sharing. Is that enough?
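A minimal Python sketch of the “sticky” idea, assuming hypothetical machine-readable terms that travel with the data: each hop may keep or narrow the permitted purposes and resharing rights it inherits, but never widen them. The names Terms, Record, and share are illustrative only and don’t come from any standard.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Terms:
    """Hypothetical machine-readable terms that travel with the data."""
    purposes: frozenset[str]   # uses the current holder is allowed
    may_reshare: bool          # whether the current holder may pass it on


@dataclass
class Record:
    data: dict
    terms: Terms
    chain: list[str] = field(default_factory=list)   # every holder so far, in order


def share(record: Record, recipient: str, requested: Terms) -> Record:
    """Hand data to the next link; its terms can only stay the same or narrow."""
    if not record.terms.may_reshare:
        raise PermissionError(f"{record.chain[-1]} may not reshare this data")
    extra = requested.purposes - record.terms.purposes
    if extra:
        raise PermissionError(f"purposes not permitted upstream: {sorted(extra)}")
    # The requested terms are now known to be no broader than the sender's.
    return Record(record.data, requested, record.chain + [recipient])


alice = Record({"address": "1 Main St"}, Terms(frozenset({"delivery", "support"}), True), ["alice"])
shop = share(alice, "shop", Terms(frozenset({"delivery", "support"}), True))
courier = share(shop, "courier", Terms(frozenset({"delivery"}), False))
print(courier.chain)   # ['alice', 'shop', 'courier']

for holder, purposes in [(shop, {"advertising"}), (courier, {"delivery"})]:
    try:
        share(holder, "ad-broker", Terms(frozenset(purposes), False))
    except PermissionError as err:
        print(err)
# purposes not permitted upstream: ['advertising']
# courier may not reshare this data
```

Note what the sketch does not do: nothing technically stops a recipient from copying record.data and ignoring the attached terms. Enforcement still rests on legal obligations at each link of the chain.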

Within today’s consent system, if we could prove consent was given (using Consent Receipts) and look up the precise legal rights for a data operation (using the Consent Name Service), we’d be ahead of the game. If downstream data processors were required to subscribe (using the Shared Signals Framework) to a feed of consent withdrawals, would that help ensure consent is consistently respected across services and systems?
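A minimal in-process Python sketch of the withdrawal-feed idea, assuming each downstream processor indexes the data it holds by the consent receipt ID it was collected under. ConsentFeed, Processor, and the receipt IDs are hypothetical. The real Shared Signals Framework delivers signed Security Event Tokens over push or poll transports, which this stand-in does not model.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ConsentFeed:
    """In-process stand-in for a withdrawal feed (NOT the SSF wire protocol)."""
    handlers: list[Callable[[str], None]] = field(default_factory=list)

    def subscribe(self, handler: Callable[[str], None]) -> None:
        self.handlers.append(handler)

    def withdraw(self, receipt_id: str) -> None:
        """Fan the withdrawal out to every subscribed downstream processor."""
        for handler in self.handlers:
            handler(receipt_id)


@dataclass
class Processor:
    name: str
    held: dict[str, dict] = field(default_factory=dict)   # receipt ID -> data

    def ingest(self, receipt_id: str, data: dict) -> None:
        self.held[receipt_id] = data

    def on_withdrawal(self, receipt_id: str) -> None:
        # Drop (and so stop processing) anything collected under that consent.
        self.held.pop(receipt_id, None)


feed = ConsentFeed()
crm, analytics = Processor("crm"), Processor("analytics")
for processor in (crm, analytics):
    feed.subscribe(processor.on_withdrawal)

crm.ingest("receipt-123", {"email": "alice@example.com"})
analytics.ingest("receipt-123", {"visits": 14})
analytics.ingest("receipt-456", {"visits": 3})

feed.withdraw("receipt-123")
print(crm.held, analytics.held)   # {} {'receipt-456': {'visits': 3}}
```

Keying stored data by receipt ID is the design choice that makes a withdrawal actionable: a processor that cannot tie its data back to the consent it was collected under has nothing specific to delete when the signal arrives.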

In a post-consent future, if we had sets of universal machine-readable privacy terms (of the sort I hope will emerge from the IEEE P7012 group), we could use them in right-to-use personal data licenses (of the sort discussed in my paper with Lisa LeVasseur). Such licenses would have robust downstream chaining properties. Could layering a technical digital rights management (DRM) approach on top lead to better enforcement?

I believe: Legal and operational controls are necessary, but still amount to relatively weak promises.

I predict: FHE and similar cryptographic protections will be the pièce de résistance in giving individuals greater control over how their data is used.

GDPR 2.0

I’m not one to rely on new regulations to fix things, but it’s worth the thought experiment.

What would a refactored GDPR look like if it pivoted to real data subject empowerment and transparency? Would “Individuals have the right to determine their relationship status” be a worthy new data subject right, and if “permissions about digital assets” were required to interoperate, would this move data ecosystems in the right direction?

I believe: For future GDPR changes to be effective, policymakers would have to preregister desired outcomes and then track enforcement metrics to test them.

I predict: This won’t happen. But…

I Believe, and Hope You Do Too

If privacy isn’t secrecy and it isn’t encryption, what is it? UMAnitarians say Privacy is context, control, choice, and respect.

Even though consent has missed the mark, I believe we can push the envelope and shape a digital future where the human capacity to make an informed, uncoerced decision is respected while businesses also benefit from relationships with humans. Here’s to shifting from chance to control – and a promise kept.

Thanks for coming with me on this journey. If you seek to improve your organization’s use of personal data ecosystems, or have ideas about solutions to consent’s flaws, I hope you’ll reach out or comment.
