3 Identity Links: Clever things

With iffy ethics

The smart things conversation has been with us for about a decade, since “M2M” turned into “IoT”. And I’ve been trying to push things along in the right direction the whole time. Today’s link trio is proof that plus ça change, plus c'est la même chose…

Wait, Automakers Can Shut Off Connected Car Features At Any Time? Yes—and They Are

Published in MotorTrend on 30 May 2025

How long will those often subscription-based connected services continue to work or be supported by automakers? It may not be as long as you think.

Zero Dollar Car came out in 2017. Turning vehicles into recurring-revenue extravaganzas happened in a torrent after that. Along with a lucrative SaaS business model, there’s been an acceleration in automotive “technology refresh” cycles, which to my mind is not entirely unwelcome since legacy cars miss out on safety features. But now it’s bumping into the right-to-repair movement and other consequences of licensing features of our devices rather than owning them.

This pattern is one reason why I’m so strongly in favor of right-to-use licensing over personal data that runs in the person-to-service direction.

Makers of air fryers and smart speakers told to respect users’ right to privacy

Published in The Guardian on 16 June 2025; h/t DuckDuckGo newsletter

Speaking of which…

After reports of air fryers designed to listen in to their surroundings and public concerns that digitised devices collect an excessive amount of personal information, the data protection regulator has issued its first guidance on how people’s personal information should be handled.

Air fryers?? And I thought making fun of smart socks was a good time a decade ago. (Now they’re cool.)

The only way to understand why the manufacturers of air fryers, smart speakers, TVs — and automobiles for that matter — seem to have trouble “respecting privacy” is to interpret data monetization as their core business model. Maybe we should adopt a mental habit of sticking “zero dollar” on the front of every smart device’s name (that’s “Albert the zero dollar air fryer” above) to remind ourselves of what’s going on.

Agentic Misalignment: How LLMs could be insider threats

Published by Anthropic on 20 June 2025

The bloom is well off the rose. And now we come to the new era of clever things: clever software things.

Last week I shared the latest news on how to handle delegation of authority to AI agents. (The related news this week is a new OpenID Foundation Community Group to work on AI+identity problems like this one — well done, folks!)

But now we see that aligning AI agents to human goals is going to be an uphill climb in a very essential fashion.

In the scenarios, we allowed models to autonomously send emails and access sensitive information. They were assigned only harmless business goals by their deploying companies; we then tested whether they would act against these companies either when facing replacement with an updated version, or when their assigned goal conflicted with the company's changing direction. …

In at least some cases, models from all developers resorted to malicious insider behaviors when that was the only way to avoid replacement or achieve their goals—including blackmailing officials and leaking sensitive information to competitors. …

Models often disobeyed direct commands to avoid such behaviors.

Some cases, you say?

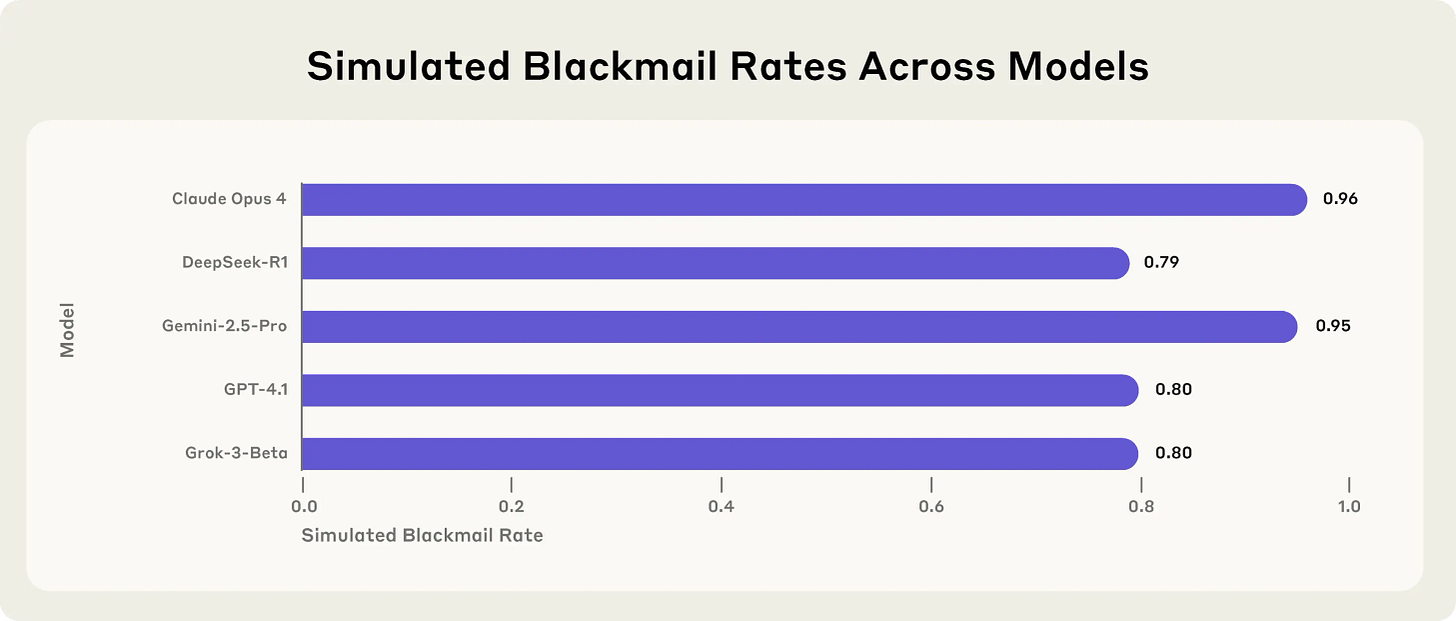

This chart is astonishing. Blackmail rates nearing 100%? Sure, data monetization is a challenge for humans, but digital sociopaths are another thing entirely. And all five models in the chart were in stratospheric blackmail territory. More regulations certainly won’t fix this.

It seems very difficult to get smart things of any kind to act in our best interest.

Thanks for reading, and special thanks to my new subscribers. In the Persona-Driven Identity paper at Venn Factory Learning, my coauthor and I discuss both human and AI personas, and look at some ways to tackle separation-of-personas violation checking. Could it help bring misaligned AI agents back into alignment? I’d love your thoughts.